How Google’s DS-STAR turns natural language questions into verified answers over raw data files - plus my open-source implementation.

I’m taking a couple weeks off between jobs right now, and wanted to keep the brain in gear (relatively speaking). I’m also a data scientist who’s into agentic workflows and more than a little paranoid they’ll eat our lunch. So instead of doom-scrolling, I spent some time with Google’s DS-STAR paper and turned it into something you can run.

Here’s the hook: Let’s say you’ve got a folder of CSVs, a JSON dump, or a mix of semi-structured files. Someone asks: “What was our top-selling product last quarter?” or “Which regions had the highest churn?” Today, that usually means writing some code, debugging it, trying a few iterations, and only then getting an answer. What if an agent could do the exploration, coding, and verification for you?

Google’s DS-STAR (Data Science Agent via Iterative Planning and Verification) tries to do exactly that. I’ve open-sourced a Python implementation so you can run it on your own data with Gemini or OpenAI models.

What DS-STAR Does

DS-STAR answers factoid questions over structured or unstructured data. You give it:

A question (e.g. “What’s the average order value by region?”)

A data directory (CSV, JSON, Markdown, etc.)

Optional guidelines for how you want the final answer formatted

It returns a concrete answer plus the code and reasoning it used to get there. No hand-written code required.
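For a sense of what “hand-written code” means here, the example question above (average order value by region) might otherwise be answered with a short script like this one. It’s only an illustrative sketch: the column names `region` and `order_value` and the inline sample rows are my assumptions, not something taken from DS-STAR or the repo.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample data; in practice this would be a CSV in your data directory.
SAMPLE_CSV = """region,order_value
North,100.0
North,50.0
South,30.0
"""


def average_order_value_by_region(csv_text: str) -> dict[str, float]:
    """Return the mean order_value per region from CSV text."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["region"]] += float(row["order_value"])
        counts[row["region"]] += 1
    return {region: totals[region] / counts[region] for region in totals}


if __name__ == "__main__":
    print(average_order_value_by_region(SAMPLE_CSV))
```

This is roughly the kind of script the agent is meant to write, run, and check on your behalf.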

How It Works Under the Hood

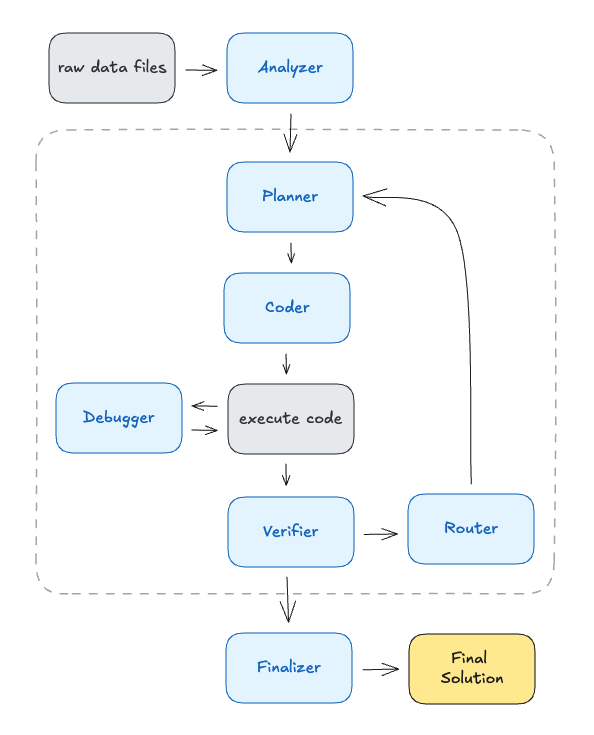

The pipeline is a multi-agent loop:

Analyzer: Explores your raw data files and builds summaries so downstream agents know what’s available.

Planner → Coder → Execute → Debugger → Verifier: Plans a solution, generates code, runs it, fixes errors, and checks that the result actually answers the question. If verification fails, a separate Router agent decides the next step and the loop continues.

Finalizer: Turns the verified result into a clear, formatted answer (e.g. markdown, bullet points).
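To make the Analyzer step more concrete, here’s a minimal, rule-based stand-in that walks a data directory and builds one small summary per file. It is not the repo’s implementation and involves no LLM; the function names `summarize_file` and `summarize_directory` and the summary fields are hypothetical, chosen only for illustration.

```python
import csv
import json
from pathlib import Path


def summarize_file(path: Path, preview_chars: int = 200) -> dict:
    """Build a small description of one data file (hypothetical summary format)."""
    summary = {"name": path.name, "size_bytes": path.stat().st_size}
    suffix = path.suffix.lower()
    if suffix == ".csv":
        with path.open(newline="", encoding="utf-8") as f:
            rows = list(csv.reader(f))
        # First row is treated as the header; the rest are data rows.
        summary["columns"] = rows[0] if rows else []
        summary["row_count"] = max(len(rows) - 1, 0)
    elif suffix == ".json":
        data = json.loads(path.read_text(encoding="utf-8"))
        summary["top_level_type"] = type(data).__name__
        if isinstance(data, dict):
            summary["keys"] = sorted(data)
    else:
        # Markdown and other text files: keep a short preview only.
        text = path.read_text(encoding="utf-8", errors="replace")
        summary["preview"] = text[:preview_chars]
    return summary


def summarize_directory(data_dir: str) -> list[dict]:
    """Summarize every regular file directly inside data_dir, sorted by name."""
    files = sorted(p for p in Path(data_dir).iterdir() if p.is_file())
    return [summarize_file(p) for p in files]
```

Summaries like these are what let the downstream agents plan against files they have never seen.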

So you get planning, code execution, and verification in one system, closer to how a data scientist would work than to a one-shot LLM answer.
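The control flow of that loop can be sketched in a few lines of Python. Treat this as a sketch under stated assumptions rather than the repo’s code: each agent is passed in as a plain callable, and `RunState`, `run_loop`, the round limits, and the router’s return convention (`None` to keep the plan, or the number of steps to keep) are all hypothetical names and choices of mine. The Analyzer and Finalizer are left out to keep the focus on the loop.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class RunState:
    question: str
    plan: list[str] = field(default_factory=list)
    code: str = ""
    result: str = ""
    log: list[str] = field(default_factory=list)


def run_loop(
    question: str,
    plan_step: Callable[[RunState], str],           # Planner: propose the next step
    write_code: Callable[[RunState], str],          # Coder: turn the plan into code
    execute: Callable[[str], tuple[bool, str]],     # Execute: returns (ok, output or error)
    debug: Callable[[str, str], str],               # Debugger: (code, error) -> fixed code
    verify: Callable[[RunState], bool],             # Verifier: does the result answer it?
    route: Callable[[RunState], Optional[int]],     # Router: assumed convention, see above
    max_rounds: int = 5,
    max_debug_attempts: int = 3,
) -> RunState:
    state = RunState(question)
    for round_no in range(1, max_rounds + 1):
        state.plan.append(plan_step(state))
        state.code = write_code(state)
        ok, output = execute(state.code)
        attempts = 0
        # Retry failed code a bounded number of times.
        while not ok and attempts < max_debug_attempts:
            state.code = debug(state.code, output)
            ok, output = execute(state.code)
            attempts += 1
        state.result = output
        state.log.append(f"round {round_no}: ok={ok}")
        if ok and verify(state):
            state.log.append("verified")
            return state
        # Verification failed: keep the plan or truncate it before the next round.
        keep = route(state)
        if keep is not None:
            state.plan = state.plan[:keep]
    state.log.append("stopped: round limit reached")
    return state
```

In a real system each callable would wrap an LLM call or a sandboxed code runner; here they are just function arguments, so you can swap in stubs and watch how the plan and log evolve.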

Selling Points For Data Scientists

Point and ask: Point at a data directory and ask in plain language; exploration of raw data files is built in.

Auditable: You get the code that produced the answer and logs of the plan and verification steps.

Flexible: Works with mixed file types and supports both Gemini and OpenAI backends.

Try It Yourself

Install with Poetry, point it at your data directory and output directory, and call run_analysis() with your question. The repo includes a minimal example and a DABstep-based example for harder, multi-step tasks.

Implementation (Python, Gemini + OpenAI):

https://github.com/jonathan-hsiao/DS-STAR

Paper:

DS-STAR: Data Science Agent via Iterative Planning and Verification (arXiv)

If you use it on your own datasets or extend it, I’d love to hear how it goes. Drop a note in the repo discussions or reach out.