How I built an MCP server plus a thin chat client (CLI) to answer natural-language questions using page-cited evidence from ingested rulebook PDFs.

As my brief fun-employment comes to an end, here is the last of the three projects I gave myself to ship over the break (check out my recent posts for the other two if you’re interested).

The goal of this third project was to build a tiny MCP server for a niche use case: something lightweight, useful, and a good vehicle for learning the Model Context Protocol. I landed on a board game rules referee agent: drop in raw rulebook PDFs, ask questions in plain English, and get evidence-backed answers with page citations.

I called it Gamemaster. This post is a short tour of what it does, how it’s wired (high-level, then MCP, then retrieval), and a few decisions along the way.

What It Does (and a Demo)

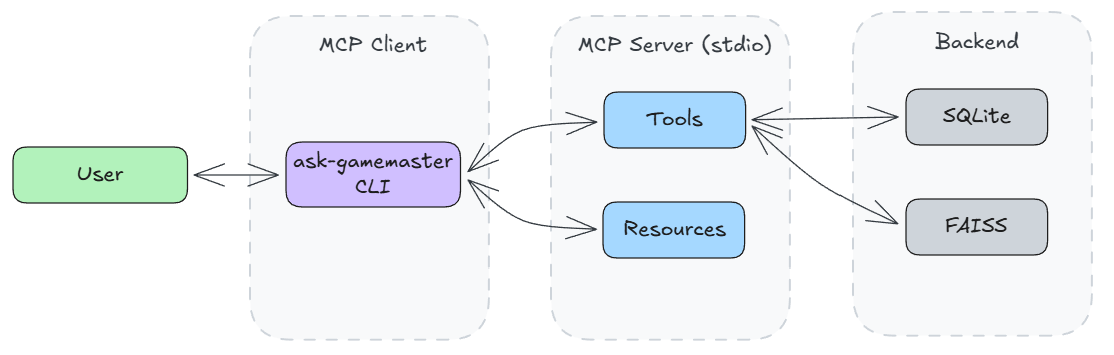

Gamemaster is two pieces:

An MCP server - Exposes tools over stdio (list games, list sources, search rules, get chunks, ingest PDFs). Includes instructions and resources so any MCP client can use it.

A thin CLI client - Spawns the server, runs a small agent (LLM + tool loop), and lets you chat in the terminal.

You put PDFs in a folder (rulebooks/<game_id>/<filename>.pdf), ingest them (manually or via the agent), then ask things like “Can I play a bird card and activate a power in the same turn?” Answers are grounded in retrieved evidence, with citations.
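To make the folder convention concrete, here is a minimal sketch of how an ingest step could discover rulebooks from that layout. The function name discover_rulebooks is hypothetical (not Gamemaster's actual code), and it assumes lowercase .pdf extensions and exactly one directory level per game.

```python
from __future__ import annotations

from pathlib import Path


def discover_rulebooks(root: str | Path) -> dict[str, list[Path]]:
    """Map each game_id to its PDFs, following rulebooks/<game_id>/<filename>.pdf.

    Only files exactly one directory below root are picked up; on
    case-sensitive filesystems this assumes a lowercase ".pdf" extension.
    """
    games: dict[str, list[Path]] = {}
    for pdf in sorted(Path(root).glob("*/*.pdf")):
        if pdf.is_file():
            games.setdefault(pdf.parent.name, []).append(pdf)  # folder name = game_id
    return games
```

Calling discover_rulebooks("rulebooks") would return something like {"wingspan": [...]}, one entry per game folder.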

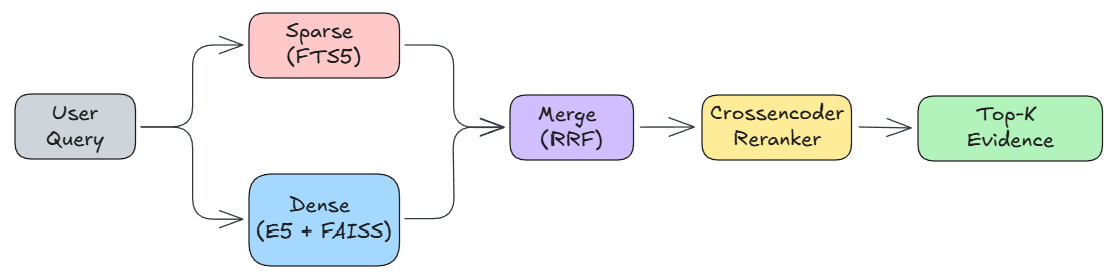

Retrieval uses a hybrid strategy (sparse with SQLite FTS5 + dense with FAISS, merged using reciprocal rank fusion, RRF) along with a small cross-encoder reranker.


High-Level Shape

The MCP server owns the rules store (DB + index) and all tool logic; the client just orchestrates the conversation and tool calls. Very standard stuff here.

Why MCP?

This use case is a great MCP fit because:

Answering board game rules questions reliably is a multi-step, tool-driven workflow: identify the right game/source, run search, pull supporting chunks, resolve ambiguities, and return a cited answer.

The agent benefits from structured tools and iterative reasoning, not a single “call-and-respond” API.

MCP gives you a standard, reusable tool surface (ingest/search/get-chunks/list-sources) that any client can plug into.

MCP Architecture + Some Decisions

The server is built with FastMCP. It listens over stdio (no HTTP), so clients start it as a subprocess and talk over stdin/stdout. That keeps deployment simple (almost trivial).
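Under the hood, the MCP stdio transport exchanges JSON-RPC 2.0 messages, one per line, with no embedded newlines. The sketch below shows that framing using only the standard library. The helper names and the search_rules argument names (query, game_id) are hypothetical, and it skips the initialize handshake that the MCP SDKs (FastMCP on the server side) handle for you.

```python
from __future__ import annotations

import json
from typing import Any, BinaryIO, Iterator


def encode_message(message: dict[str, Any]) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line.

    json.dumps escapes newlines inside strings, so the payload itself never
    contains a raw newline, which the stdio transport forbids.
    """
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def read_messages(stream: BinaryIO) -> Iterator[dict[str, Any]]:
    """Yield decoded JSON-RPC messages from a byte stream, one per non-empty line."""
    for raw in stream:
        line = raw.strip()
        if line:
            yield json.loads(line)


def tool_call_request(request_id: int, tool: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an MCP tools/call request (argument names depend on the tool's schema)."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
```

In a real client, the encoded bytes would be written to the server subprocess's stdin (for example via subprocess.Popen with stdin=PIPE and stdout=PIPE), and read_messages would consume its stdout.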

Tools

The server exposes seven tools:

list_games, list_sources: What’s in the store (games, PDFs)

search_rules: Retrieve evidence chunks with citations and scores. Retrieval uses a hybrid strategy (sparse FTS5 + dense FAISS) with a small cross-encoder reranker.

get_chunks: Full text for a set of chunk IDs (evidence for LLM)

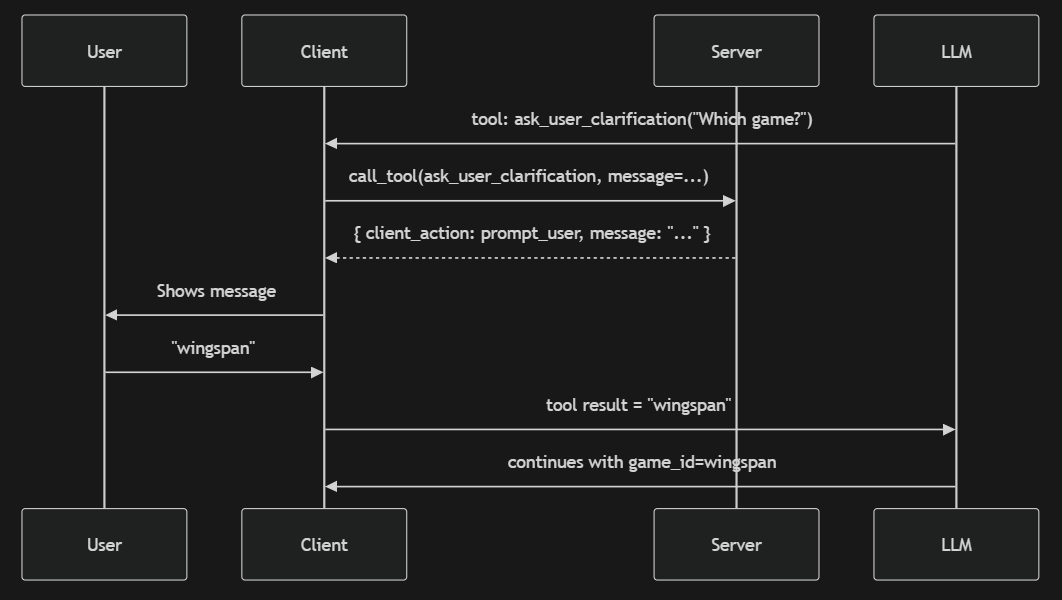

ask_user_clarification: Wraps a request-for-clarification message, so the agent and client share a strict protocol for "ask the user and pass the reply back as the tool result"

ingest_pdf, ingest_pdfs: Ingest one or many PDFs. Extract text, chunk by page/section, write to SQLite (FTS5), embed, and add to FAISS index for hybrid search.
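A minimal sketch of the “chunk by page/section” step, assuming the PDF text has already been extracted into one string per page (extraction is not shown). Blank-line-separated paragraphs stand in for sections here, and the Chunk fields, the ID scheme, and max_chars are hypothetical choices, not necessarily what Gamemaster does. The property that matters is that a chunk never spans two pages, so every chunk maps to exactly one page for citations.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str  # hypothetical ID scheme: "<game_id>:<source>:p<page>:<n>"
    game_id: str
    source: str    # PDF filename the chunk came from
    page: int      # 1-based page number used in citations
    text: str


def chunk_pages(game_id: str, source: str, pages: list[str], max_chars: int = 1200) -> list[Chunk]:
    """Split per-page text into chunks that never cross a page boundary.

    Blank-line-separated paragraphs act as a rough proxy for sections and are
    packed greedily while the joined chunk stays within max_chars; a single
    paragraph longer than max_chars becomes its own (oversized) chunk.
    """
    chunks: list[Chunk] = []
    for page_no, page_text in enumerate(pages, start=1):
        paragraphs = [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]
        groups: list[list[str]] = []
        for para in paragraphs:
            if groups and len("\n\n".join(groups[-1] + [para])) <= max_chars:
                groups[-1].append(para)  # still fits: extend the current chunk
            else:
                groups.append([para])  # start a new chunk on this page
        for n, group in enumerate(groups):
            chunks.append(
                Chunk(
                    chunk_id=f"{game_id}:{source}:p{page_no}:{n}",
                    game_id=game_id,
                    source=source,
                    page=page_no,
                    text="\n\n".join(group),
                )
            )
    return chunks
```

Each resulting chunk would then be written to the FTS5 table and embedded for the FAISS index.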

Why ask_user_clarification as a tool?

With a dedicated tool, the contract is clear: agent calls the tool with a message -> client prompts the user -> client returns the reply as the tool result. Turn boundaries stay clean and the model doesn’t have to infer that “wingspan” was the answer to the previous question.
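Here is one way the client side of that contract could look, as a hedged sketch. It assumes an OpenAI-style tool-result message ({"role": "tool", "tool_call_id": ..., "content": ...}) and that the server's ask_user_clarification tool simply returns the question text to show; ToolCall, handle_tool_call, call_server_tool, and the "message" argument are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolCall:
    call_id: str  # ID the LLM assigned to this tool call
    name: str
    arguments: dict[str, Any]


def handle_tool_call(
    call: ToolCall,
    call_server_tool: Callable[[str, dict[str, Any]], str],
    ask_user: Callable[[str], str] = input,
) -> dict[str, str]:
    """Run one tool call and package its result for the next LLM turn.

    Every call goes to the MCP server. For ask_user_clarification the server's
    result is treated as the question to show (an assumption); the client then
    prompts the user and returns their reply as the tool result, tied to the
    same call_id.
    """
    result = call_server_tool(call.name, call.arguments)
    if call.name == "ask_user_clarification":
        result = ask_user(result + "\n> ")
    # OpenAI-style tool message (assumed format); other LLM APIs differ.
    return {"role": "tool", "tool_call_id": call.call_id, "content": result}
```

Because the reply comes back attached to the same call ID, the model sees it as the direct answer to its own question rather than as a new, free-floating user turn.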

Resources + Instructions

The server’s instructions are short and lean on resources for the details, essentially saying “read resource X when you do Y.”

The following resources are specified:

ingest_instructions: how to add/ingest rulebooks and guide the user through the upload process

question_answering_instructions: workflow, core principles, and guardrails for answering questions

clarification/game: template for asking the user to clarify which game they mean

clarification/source: template for asking the user to clarify which source(s) to use

Retrieval Architecture: Sparse, Dense, Merge, Rerank

Rulebooks are chunked (by page/section), stored in SQLite with FTS5, and embedded for dense search. A single search_rules call runs the full pipeline:

Sparse: SQLite FTS5. The user query is turned into an OR of terms (with prefix matching for longer tokens) and used to search a single FTS table filtered by game_id/source, returning the top-k by BM25 score.

Dense: e5-small-v2 with the usual prefix convention: “query: ...” for the user query and “passage: ...” for chunk text at index time. FAISS with inner product on normalized vectors (IndexFlatIP + IndexIDMap2), which makes the scores cosine similarities. Search returns (chunk_id, score) for the given game.

Merge: Top-k from sparse and top-k from dense are merged using reciprocal rank fusion (RRF): take each chunk's rank position in each list, sum 1/(k + rank) across the lists, and sort by the combined score.

Rerank: Take the top candidates from the merge, run a small cross-encoder (ms-marco-MiniLM-L-6-v2) on (query, chunk text), and return the top-k by rerank score.
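The sparse and merge steps can be sketched with only the standard library (the dense and rerank steps need model weights, so they are left out). This is a minimal illustration under assumptions: the chunks_fts table name and schema, the 4-character prefix threshold, and k = 60 (a commonly used RRF default) are hypothetical, not confirmed details of Gamemaster. It also needs a SQLite build with FTS5 enabled, which most Python distributions include.

```python
from __future__ import annotations

import re
import sqlite3


def fts_or_query(user_query: str, prefix_min_len: int = 4) -> str:
    """Turn free text into an FTS5 OR query; longer tokens get prefix matching.

    Each token is double-quoted so punctuation or FTS5 keywords in the
    question cannot break the MATCH syntax. prefix_min_len is a hypothetical
    threshold for "longer tokens".
    """
    tokens = re.findall(r"\w+", user_query.lower())
    terms = [f'"{t}"*' if len(t) >= prefix_min_len else f'"{t}"' for t in tokens]
    return " OR ".join(terms)


def sparse_search(conn: sqlite3.Connection, query: str, game_id: str, k: int = 20) -> list[str]:
    """Return up to k chunk IDs for one game, best BM25 match first.

    Assumes a hypothetical table:
      CREATE VIRTUAL TABLE chunks_fts USING fts5(text, chunk_id UNINDEXED, game_id UNINDEXED)
    FTS5's bm25() is lower-is-better, hence the ascending ORDER BY.
    """
    match = fts_or_query(query)
    if not match:  # nothing searchable in the question
        return []
    rows = conn.execute(
        "SELECT chunk_id FROM chunks_fts "
        "WHERE chunks_fts MATCH ? AND game_id = ? "
        "ORDER BY bm25(chunks_fts) LIMIT ?",
        (match, game_id, k),
    ).fetchall()
    return [row[0] for row in rows]


def rrf_merge(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Reciprocal rank fusion over several best-first ID lists.

    Every list contributes 1 / (k + rank) for each ID it contains (rank is
    1-based); contributions are summed and IDs are sorted by the total.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

In the full pipeline, the FAISS ranking would be passed alongside the sparse list, as in rrf_merge([sparse_ids, dense_ids]), and the top of the merged list handed to the cross-encoder. RRF only looks at rank positions, which is why it can combine BM25 scores and cosine similarities without normalizing them onto a common scale.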

Wrapping Up

This was a fun one - I learned a ton about MCP in the process, and I’d love to hear what I got wrong or how you’d extend or simplify it.

As I said at the start, my fun-employment is coming to an end (new gig is starting next week). Had a blast tinkering and writing over the last few weeks - if you followed along with my last couple posts, thanks for reading! Hope I’ll be back before long, but for now - shutting down for my final week of freedom.